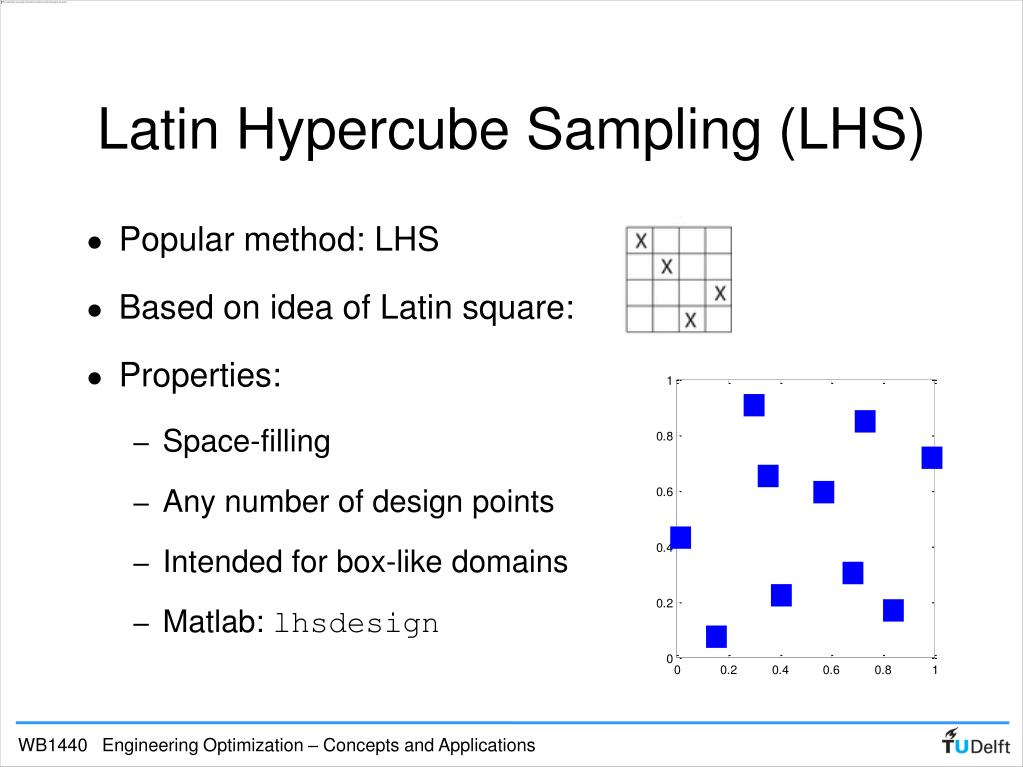

Latin hypercube sampling (LHS) is a statistical method for generating a near-random sample of parameter values from a multidimensional distribution. It is often used to construct computer experiments or for Monte Carlo integration. LHS was described by Michael McKay of Los Alamos National Laboratory in 1979. Latin hypercube sampling ensures that the range of each parameter is represented evenly across the set of scenarios, resulting in wide coverage of the uncertainty space (McKay, Beckman, & Conover, 1979; Kasprzyk, Nataraj, Reed, & Lempert, 2013).

The ADE-XL Monte Carlo sampling methods are Random and Latin Hypercube. It's slower because it requires more SPICE runs than Solido for 3-sigma (per ...). While a little slower, Latin hypercube sampling is much more accurate than simple random Monte Carlo sampling.

Quadrature Methods for the Calculation of Subgrid Microphysics Moments

Many cloud microphysical processes occur on a much smaller scale than a typical numerical grid box can resolve. In such cases, a probability density function (PDF) can act as a proxy for subgrid variability in these microphysical processes; this is known as the assumed PDF method. By placing a density on the microphysical fields, one can use samples from this density to estimate microphysics averages. Khairoutdinov and Kogan's autoconversion and accretion formulas, as prototypical microphysical formulas, are used to illustrate the benefit of using quadrature instead of Monte Carlo or Latin hypercube sampling.
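The assumed PDF approach just described, estimating a microphysics average from samples of a density, can be sketched in Python with simple random sampling and LHS side by side. This is a minimal illustration under stated assumptions, not the paper's setup: the rate $R = q^{A} N^{B}$ with $A = 2.47$ and $B = -1.79$ (chosen to resemble a power-law autoconversion rate), the independent lognormal PDFs for $q$ and $N$, and all parameter values are hypothetical. The `latin_hypercube` helper follows the usual construction (one point per stratum on every axis, strata paired across axes by random permutations), and independence gives the exact mean in closed form so each estimate can be checked.

```python
import math
import random
from statistics import NormalDist

# Illustrative assumptions, not taken from the paper: a power-law rate
# R = q**A * N**B with independent lognormal q and N under the assumed PDF.
A, B = 2.47, -1.79
MU_LN_Q, SIGMA_LN_Q = math.log(1e-3), 0.5    # log(q) ~ Normal(mu, sigma^2)
MU_LN_N, SIGMA_LN_N = math.log(100.0), 0.3   # log(N) ~ Normal(mu, sigma^2)
EPS = 1e-12                                  # keeps probabilities inside (0, 1)


def latin_hypercube(n, d, rng):
    """n points in [0, 1)^d with exactly one point in each of n strata per axis."""
    columns = []
    for _ in range(d):
        column = [(i + rng.random()) / n for i in range(n)]  # jitter inside stratum i
        rng.shuffle(column)  # independent random pairing of strata across axes
        columns.append(column)
    return list(zip(*columns))


def simple_random(n, d, rng):
    """n independent uniform points in [0, 1)^d."""
    return [tuple(rng.random() for _ in range(d)) for _ in range(n)]


def exact_mean_rate():
    """Closed-form E[q^A N^B] for independent lognormal q and N."""
    return math.exp(A * MU_LN_Q + 0.5 * (A * SIGMA_LN_Q) ** 2
                    + B * MU_LN_N + 0.5 * (B * SIGMA_LN_N) ** 2)


def estimate_mean_rate(points):
    """Map unit-square points through inverse normal CDFs and average the rate."""
    std = NormalDist()
    total = 0.0
    for u_q, u_n in points:
        q = math.exp(MU_LN_Q + SIGMA_LN_Q * std.inv_cdf(min(max(u_q, EPS), 1 - EPS)))
        nc = math.exp(MU_LN_N + SIGMA_LN_N * std.inv_cdf(min(max(u_n, EPS), 1 - EPS)))
        total += q ** A * nc ** B
    return total / len(points)


if __name__ == "__main__":
    rng = random.Random(0)
    exact = exact_mean_rate()
    for name, sampler in [("random", simple_random), ("LHS", latin_hypercube)]:
        est = estimate_mean_rate(sampler(1000, 2, rng))
        print(f"{name:6s} relative error: {abs(est - exact) / exact:.2e}")
```

The inverse-CDF step is what turns a unit-cube Latin hypercube into a sample from the assumed PDF; it relies on the marginals being independent, which is an assumption of this sketch.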

In practice, this behaviour doesn't actually matter. I agree with the answer by R Carnell: there is no upper bound on the number of parameters (dimensions) for which LHS is proven to be effective, though in many settings I've noticed that the relative benefit of LHS compared to simple random sampling tends to decrease as the number of dimensions increases.

The paper *Quadrature Methods for the Calculation of Subgrid Microphysics Moments* proposes using deterministic quadrature methods instead of traditional random sampling approaches to compute the microphysics statistical moments for the assumed PDF method. For smooth functions, quadrature-based methods can achieve much greater accuracy with fewer samples by choosing tailored quadrature points and weights instead of random samples.
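For the claim that tailored points and weights can beat random samples on smooth integrands, here is a minimal Python sketch using Gauss-Hermite quadrature for expectations under a normal distribution. It is an illustration, not the paper's scheme: the integrand $e^{aX}$ (a lognormal moment $q^a$ with $q = e^X$), the values $a = 2.47$, $\mu = 0$, $\sigma = 0.5$, and all helper names are assumptions. Nodes are the roots of the probabilists' Hermite polynomial $He_n$, found by a sign-change scan plus bisection, and the exact answer $e^{a\mu + a^2\sigma^2/2}$ lets both methods be checked.

```python
import math
import random


def hermite_e(n, x):
    """Return (He_n(x), He_{n-1}(x)) for probabilists' Hermite polynomials, n >= 1."""
    prev, cur = 1.0, x  # He_0 and He_1
    for k in range(1, n):
        prev, cur = cur, x * cur - k * prev  # He_{k+1} = x He_k - k He_{k-1}
    return cur, prev


def gauss_hermite_e(n, step=1e-3):
    """Nodes z_i and weights w_i with sum(w_i * f(z_i)) ~ E[f(Z)], Z ~ N(0, 1).

    Exact for polynomials of degree <= 2n - 1. All roots of He_n lie inside
    (-sqrt(4n + 2), sqrt(4n + 2)); they are bracketed by a sign-change scan
    and refined by bisection. Weights: w_i = n! / (n**2 * He_{n-1}(z_i)**2).
    """
    bound = math.sqrt(4 * n + 2) + 1.0
    nodes = []
    lo, f_lo = -bound, hermite_e(n, -bound)[0]
    while lo < bound and len(nodes) < n:
        hi = lo + step
        f_hi = hermite_e(n, hi)[0]
        # For moderate n the roots are far more than `step` apart,
        # so a sign change brackets exactly one root.
        if f_lo * f_hi < 0:
            a, b, f_a = lo, hi, f_lo
            for _ in range(60):
                mid = 0.5 * (a + b)
                f_mid = hermite_e(n, mid)[0]
                if (f_mid < 0) == (f_a < 0):
                    a, f_a = mid, f_mid
                else:
                    b = mid
            nodes.append(0.5 * (a + b))
        lo, f_lo = hi, f_hi
    weights = [math.factorial(n) / (n * n * hermite_e(n, z)[1] ** 2) for z in nodes]
    return nodes, weights


def normal_expectation_quadrature(f, mu, sigma, n):
    """E[f(X)] for X ~ N(mu, sigma^2) using an n-point Gauss-Hermite rule."""
    nodes, weights = gauss_hermite_e(n)
    return sum(w * f(mu + sigma * z) for z, w in zip(nodes, weights))


def normal_expectation_monte_carlo(f, mu, sigma, n, rng):
    """The same expectation estimated from n random draws."""
    return sum(f(rng.gauss(mu, sigma)) for _ in range(n)) / n


def smooth_rate(x, a=2.47):
    """Hypothetical smooth integrand q**a with q = exp(x)."""
    return math.exp(a * x)


if __name__ == "__main__":
    mu, sigma, a = 0.0, 0.5, 2.47
    exact = math.exp(a * mu + 0.5 * (a * sigma) ** 2)  # lognormal moment
    quad = normal_expectation_quadrature(smooth_rate, mu, sigma, 10)
    mc = normal_expectation_monte_carlo(smooth_rate, mu, sigma, 10, random.Random(0))
    print(f"10-point quadrature relative error: {abs(quad - exact) / exact:.1e}")
    print(f"10-sample Monte Carlo relative error: {abs(mc - exact) / exact:.1e}")
```

If NumPy is available, `numpy.polynomial.hermite_e.hermegauss` returns the same nodes, with weights that sum to $\sqrt{2\pi}$ rather than 1. The advantage depends on smoothness: for integrands with kinks or thresholds, a fixed rule can lose much of its edge.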

If you read the chapter cited by the accepted answer, they talk about the effectiveness of variance reduction, or efficiency, measured relative to a base algorithm such as simple random sampling. The plots they showed were the confidence intervals for the mean of their cost function with increasing sample size, in one and two dimensions.

The conclusions in the literature are clear. For functions which are "additive" in the margins of the Latin hypercube, the variance of the estimated mean is never larger than that from a simple random sample of the same size, regardless of the number of dimensions and regardless of sample size. For non-additive functions, the Latin hypercube sample may still provide benefit, but it is less certain to do so in all cases. Even then, an LHS of size $n > 1$ gives an estimator whose variance is less than or equal to that of a simple random sample of size $n-1$ (see the accepted answer, and also Stein 1987 and Owen 1997).
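The variance claims above can be checked empirically with a short Python sketch, offered as an illustration rather than a proof. It repeats each sampling scheme many times and compares the variance of the resulting mean estimates for two hypothetical test functions on the unit cube: an additive one, $\sum_j x_j^2$, and a pure two-way interaction, $(x_1 - 0.5)(x_2 - 0.5)$, whose main effects are zero. The sample size, dimension, replicate count and helper names are all assumptions.

```python
import random
import statistics


def simple_random(n, d, rng):
    """n independent uniform points in [0, 1)^d."""
    return [[rng.random() for _ in range(d)] for _ in range(n)]


def latin_hypercube(n, d, rng):
    """One point per stratum on every axis, strata paired by random permutations."""
    columns = []
    for _ in range(d):
        column = [(i + rng.random()) / n for i in range(n)]
        rng.shuffle(column)
        columns.append(column)
    return [list(point) for point in zip(*columns)]


def additive(x):
    """Sum of one-dimensional terms, so there are no interactions."""
    return sum(xi * xi for xi in x)


def pure_interaction(x):
    """Two-way interaction whose main effects are exactly zero."""
    return (x[0] - 0.5) * (x[1] - 0.5)


def estimator_variance(sampler, f, n, d, reps, rng):
    """Empirical variance of the sample-mean estimate of E[f] across replicates."""
    means = [statistics.fmean(f(p) for p in sampler(n, d, rng)) for _ in range(reps)]
    return statistics.variance(means)


def variance_ratio(f, n=20, d=5, reps=2000, seed=0):
    """Var(LHS mean) / Var(simple random mean) for the same n and d."""
    rng = random.Random(seed)
    return (estimator_variance(latin_hypercube, f, n, d, reps, rng)
            / estimator_variance(simple_random, f, n, d, reps, rng))


if __name__ == "__main__":
    n = 20
    print(f"additive:         {variance_ratio(additive, n=n):.4f}")
    print(f"pure interaction: {variance_ratio(pure_interaction, n=n):.4f}")
    print(f"bound n/(n-1):    {n / (n - 1):.4f}")
```

For the additive function the ratio should be far below 1. For the pure interaction LHS has nothing to stratify away, so the ratio should sit near 1, and the $n-1$ bound quoted above caps it at $n/(n-1)$ apart from replicate noise.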

There is no upper bound on the number of dimensions for which LHS is proven to be effective.
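To illustrate both this point and the observation above that the relative benefit of LHS tends to shrink as the number of dimensions grows, here is a minimal Python sketch that sweeps the dimension $d$. It uses a hypothetical product model $f(x) = \prod_j \bigl(1 + c(x_j - 0.5)\bigr)$ with $c = 1$ on the unit cube, chosen because the share of its variance carried by main effects falls as $d$ grows; the model, sample sizes and helper names are assumptions, and the output is an empirical illustration, not a proof.

```python
import random
import statistics


def simple_random(n, d, rng):
    """n independent uniform points in [0, 1)^d."""
    return [[rng.random() for _ in range(d)] for _ in range(n)]


def latin_hypercube(n, d, rng):
    """One point per stratum on every axis, strata paired by random permutations."""
    columns = []
    for _ in range(d):
        column = [(i + rng.random()) / n for i in range(n)]
        rng.shuffle(column)
        columns.append(column)
    return [list(point) for point in zip(*columns)]


def product_model(x, c=1.0):
    """prod_j (1 + c * (x_j - 0.5)): interactions carry more variance as d grows."""
    out = 1.0
    for xi in x:
        out *= 1.0 + c * (xi - 0.5)
    return out


def variance_ratio(d, n=20, reps=1000, seed=0):
    """Var(LHS mean) / Var(simple random mean) for the product model in d dimensions."""
    rng = random.Random(seed)

    def var_of_mean(sampler):
        means = [statistics.fmean(product_model(p) for p in sampler(n, d, rng))
                 for _ in range(reps)]
        return statistics.variance(means)

    return var_of_mean(latin_hypercube) / var_of_mean(simple_random)


if __name__ == "__main__":
    for d in (2, 5, 10, 20):
        print(f"d = {d:2d}: Var(LHS)/Var(SRS) = {variance_ratio(d):.3f}")
```

For this model the fraction of variance carried by main effects is $dv / \bigl((1+v)^d - 1\bigr)$ with $v = c^2/12$, which falls from 1 at $d = 1$ toward 0 as $d$ grows. For large $n$, LHS removes roughly that fraction, so the ratio should rise with $d$ while staying below about $n/(n-1)$.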
